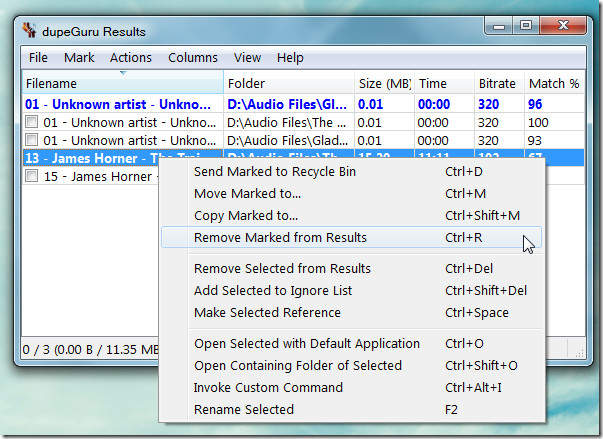

I installed dupeGuru and set it to work on three 6TB drives. After ~2 days it gave me a huge list (~150k duplicates representing ~850GB), which I expected since I pulled my NAS to these drives and I knew I had done multiple backups of various things at various times to various places. The first time I did this I accidentally hit 'export as html' and my machine became unresponsive as it tried to give that list to Firefox. I cried a little on the inside when I rebooted and then waited another two days for the scan to run again (thinking about this, the default behaviour should REALLY be to export to an HTML FILE ON DISK, not to pop open a browser instance. I can't be the only person who wants to use this program on large datasets).

The second time I was wiser: I saved the results and then checked that they were saved before I did anything else. One reboot later I tried to load the results so I could continue my manual parsing (there are many duplicate directories that have simply been saved to the same place twice, and I am manually merging those directories), but all I got was a blank 'dupeGuru Results' screen with zero files. The dupeGuru save file is ~50MB, so that just doesn't seem right. I googled this and found references to several other people experiencing the same thing (here: ) but no solution. I am using Debian Jessie, and I installed dupeGuru from the apt repository.

This is a slightly deeper question than simply finding dupes. I have a 100k photo library collected from different cameras and downloaded from Google Photos. The library is consolidated in one folder, with countless subfolders that I keep as they sometimes provide good context about the photos they contain. It has had all the crud taken out: MS Word files, PDFs, spreadsheets, ini files - how does this stuff get in there over 25 years? I kept sidecar files that contain metadata, such as json and txt files, just in case I might want the extra image metadata they contain.

In a previous attempt to de-dupe, I used DupeGuru, and what I found is that what constitutes a "match" may not take everything I need into consideration. I found that DupeGuru can list file matches on EXIF data, and in the results I can choose the largest file in each bunch, in hopes of keeping the photos with the most image data. But I then learned, by checking, that some of the files I was choosing didn't have the best "Date" metadata, or any at all. Is there a tool better than DupeGuru that will allow me to say that even though the date/time matches, I want the largest file and the most metadata?

I think I used DupeGuru quite a while ago, and it was OK. More recently I've used PhotoSweeper, but I think it's macOS only.
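For the photo-library question above, the rule "when the date/time matches, keep the file with the most metadata and the largest size" can be written down as a small sketch. This is not how dupeGuru or PhotoSweeper work. The `Photo` record, the `pick_keepers` function, and the sample values are all hypothetical, and it assumes the capture date, file size, and a count of metadata fields have already been extracted by some other tool. Ranking metadata ahead of size is also an assumption, since the question does not say which should win.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Photo:
    """Hypothetical record; the values are assumed to come from another tool."""
    path: str
    taken: str            # capture date/time as text, "" when the file has none
    size_bytes: int
    metadata_fields: int  # number of metadata fields found for this file


def pick_keepers(photos: List[Photo]) -> Dict[str, Photo]:
    """Group photos by capture date/time and pick one keeper per group.

    Assumed ranking: most metadata fields first, file size as the tie-breaker.
    Photos without a capture date are never grouped, so they are left alone
    for manual review instead of being treated as duplicates.
    """
    groups: Dict[str, List[Photo]] = defaultdict(list)
    for photo in photos:
        if photo.taken:
            groups[photo.taken].append(photo)

    return {
        taken: max(group, key=lambda p: (p.metadata_fields, p.size_bytes))
        for taken, group in groups.items()
    }


# Made-up sample data, for illustration only.
sample = [
    Photo("a.jpg", "2004:07:01 10:00:00", 2_000_000, 5),
    Photo("b.jpg", "2004:07:01 10:00:00", 1_500_000, 12),
    Photo("c.jpg", "2010:01:02 09:30:00", 3_000_000, 8),
    Photo("d.jpg", "2010:01:02 09:30:00", 3_500_000, 8),
    Photo("e.jpg", "", 900_000, 0),
]
keepers = pick_keepers(sample)
for taken, photo in sorted(keepers.items()):
    print(taken, "->", photo.path)
```

Swapping the two elements of the `key` tuple would rank file size ahead of metadata. A matching date/time alone does not prove two files are the same picture (burst shots can share a timestamp), so the output is best treated as a review list, not a delete list.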
